THE SECRET LIFE OF EQUALISERS: FILTER OR REVERB?

Every sound engineer loves equalisers: to repair signal quality issues, to make individual signals stand out or blend into a mix, to shape the timbre, or just to make things sound different.

Most sound engineers think of equalisers as ideal tools for treating an audio signal in the frequency domain: ‘more highs’, ‘less mid’, ‘a touch of 4K’. In the old days we had to visualise the frequency domain in our minds and adjust the equalisers with rotary potentiometers, or use ‘graphic’ equalisers, which combine 15 or 30 equalisers in one box and visualise a limited frequency domain with a row of small hardware faders.

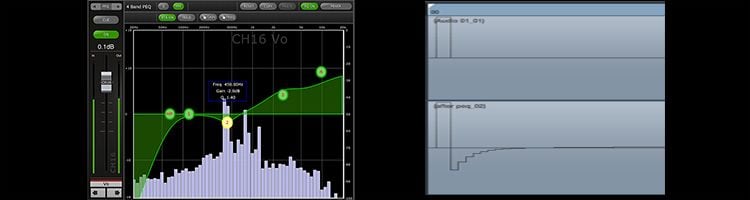

Modern mixing consoles do the visualisation for us on touch displays that even allow parameters to be set by dragging peaks to the desired frequency and gain and pinching the width, with a real-time FFT analyser display in the background. Life is easy! But there’s a catch, of course… and most sound engineers know that the ideal tool doesn’t exist.

The picture on the digital mixer display is a very useful frequency domain representation of the filters’ transfer function for continuous signals. But where there’s frequency, there’s also ‘time’: the two are reciprocal to each other, so a 1 kHz tone has a period of 1 ms.

The figure displaying a filter’s response in the time domain is called the impulse response, and every filter has one. In this tutorial’s header picture, you can see the response of a simple band-pass filter: frequency domain on the left, time domain on the right. You can create one yourself by playing a test waveform through the filter and recording the output signal with IR analysis software.
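As a rough illustration of how such a measurement works, the sketch below feeds a single-sample impulse through a digital band-pass filter and records what comes out. It is not the filter from the header picture: the coefficient formulas are the widely used Audio EQ Cookbook band-pass (0 dB peak gain), and the 4 kHz centre frequency, Q of 1 and 48 kHz sample rate are assumptions chosen only for the example.

```python
import math

def bandpass_coeffs(f0, q, fs):
    """Audio EQ Cookbook band-pass biquad (0 dB peak gain), normalised to a0 = 1."""
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b = (alpha / a0, 0.0, -alpha / a0)
    a = (1.0, -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)
    return b, a

def filter_signal(b, a, x):
    """Run x through the biquad using the direct form I difference equation."""
    y = []
    x1 = x2 = y1 = y2 = 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y

FS = 48000                       # assumed sample rate in Hz
b, a = bandpass_coeffs(4000.0, 1.0, FS)

# The test waveform: one sample of full level followed by silence (2 ms in total).
impulse = [1.0] + [0.0] * 95
h = filter_signal(b, a, impulse)

# How long the output stays within 60 dB of its peak, in milliseconds.
peak = max(abs(v) for v in h)
last = max(i for i, v in enumerate(h) if abs(v) > peak * 1e-3)
ring_ms = 1000.0 * last / FS

print("first samples:", [round(v, 4) for v in h[:6]])
print("rings for about %.2f ms" % ring_ms)
```

The input has all its energy in one sample, while the output spreads it over a number of later samples. For these assumed settings the ringing dies away in well under a millisecond; narrower or lower-frequency filters ring for longer.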

The impulse response shows that the filter distorts the waveform in time a little, moving energy from the precise timing of the input signal to other places on the timeline. It works like a reverb algorithm; in that sense, an equaliser is also a reverb. The time span of the impulse response is typically under a millisecond, so we don’t perceive it as a reverb. But the timing of the sound changes, affecting spatial properties such as ‘definition’, ‘directness’ and so forth; let’s call it ‘time distortion’. It’s the reason why classical music sound engineers try to solve timbre issues by spending lots of time placing microphones, and prefer to apply as little equalisation as possible to keep maximum time accuracy in their recordings.

It’s not difficult to imagine this response if we think of digital filters. The IIR (Infinite Impulse Response) filters most commonly used in mixing consoles consist of multiple delay lines with feedback; the FIR (Finite Impulse Response) filters often used in speaker processors use a fixed table of between ten and a few hundred taps, which is basically a sequence of delays. So an equaliser’s response is very similar to a reverberation algorithm, only on a much smaller timescale.
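The two structures can be sketched in a few lines. This is a minimal illustration, not how any particular console or speaker processor implements its filters; the three tap values and the feedback amount of 0.5 are made up for the example.

```python
def fir_filter(taps, x):
    """FIR: each output is a weighted sum of the current and delayed input samples."""
    y = []
    for n in range(len(x)):
        acc = 0.0
        for k, tap in enumerate(taps):
            if n - k >= 0:
                acc += tap * x[n - k]   # tap k acts on the input delayed by k samples
        y.append(acc)
    return y

def one_pole_iir(feedback, x):
    """IIR: the previous output is fed back into the next one (one sample of delay)."""
    y = []
    prev = 0.0
    for xn in x:
        prev = xn + feedback * prev
        y.append(prev)
    return y

impulse = [1.0] + [0.0] * 15

taps = [0.5, 0.3, 0.2]                  # hypothetical tap table
fir_ir = fir_filter(taps, impulse)      # ends after len(taps) samples
iir_ir = one_pole_iir(0.5, impulse)     # halves every sample, never reaches zero exactly

print("FIR:", fir_ir[:6])
print("IIR:", iir_ir[:6])
```

The FIR impulse response is simply the tap table itself followed by silence, which is why it is called finite. The feedback in the IIR filter keeps recirculating a fraction of the output, much like the feedback path in a delay or reverb effect.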

But it’s not a major problem. Many other factors in an audio system cause more ‘time distortion’. The most significant is the loudspeaker: just study the impulse response, or the waterfall diagram, of even a high-end speaker. Not to mention the acoustic environment in which the sound is played back, where many objects create reflections. Better still, we often like what speakers and acoustics do to our audio!

This puts the secret life of the equaliser into perspective: it’s usually OK to see the equaliser as an ideal tool that only has an effect in the frequency domain. But once you start to apply dozens of EQ bands, beware of the secret life...
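Why many bands add up can be shown with a simplified sketch. Putting filters in series convolves their impulse responses, so each extra band stretches the combined response a little further. The 3-tap filter below is a hypothetical stand-in for a single EQ band, used only because its length is easy to count; real EQ bands are usually IIR filters, where every band adds its own ringing tail instead.

```python
def convolve(h1, h2):
    """Impulse response of two filters in series: the convolution of both responses."""
    out = [0.0] * (len(h1) + len(h2) - 1)
    for i, u in enumerate(h1):
        for j, v in enumerate(h2):
            out[i + j] += u * v
    return out

band = [0.25, 0.5, 0.25]    # hypothetical 3-tap stand-in for one EQ band

chain = [1.0]               # start with 'no filter': a perfect single-sample impulse
lengths = []
for _ in range(12):         # put twelve bands in series
    chain = convolve(chain, band)
    lengths.append(len(chain))

print("response length after each band:", lengths)
```

Each band on its own spreads the impulse over three samples, but the chain of twelve spreads it over 25. The same accumulation happens, on a different scale, when dozens of real EQ bands are stacked on one signal path.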